A new post on our blog by our Data Scientist Gabriel Garau.

This time we present an application of dynamic programming to compute the Fibonacci sequence.

Dynamic programming (DP) is a programming method that consists of breaking down a complex problem into smaller subproblems, usually in a recursive way. These subproblems are then solved one at a time, typically storing the results already computed so that none of them is computed more than once.

This simple example illustrates the importance of choosing an efficient way to tackle a problem, even when different alternatives yield the same result. In this case, simply storing already computed values, as dynamic programming does, dramatically reduces the overhead, especially as the number of nested calculations grows: a naive recursive Fibonacci makes a number of calls that grows exponentially with n, while a version that stores its results computes each value only once.
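As a minimal sketch of the idea (not necessarily the code used in the article), the three functions below compute the same Fibonacci number in different ways. The names `fib_naive`, `fib_memo` and `fib_bottom_up` are hypothetical and chosen only for illustration.

```python
from functools import lru_cache


def fib_naive(n: int) -> int:
    """Plain recursion: the same subproblems are recomputed many times."""
    if n < 2:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    """Top-down DP: lru_cache stores each result, so every n is computed once."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


def fib_bottom_up(n: int) -> int:
    """Bottom-up DP: build the sequence iteratively, keeping only two values."""
    previous, current = 0, 1
    for _ in range(n):
        previous, current = current, previous + current
    return previous


if __name__ == "__main__":
    # All three agree; only the amount of repeated work differs.
    print(fib_naive(20), fib_memo(20), fib_bottom_up(20))
```

The naive version becomes noticeably slow for n in the thirties, while the two DP versions remain fast because each value is computed only once.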

You can read the full article below:

Demand forecasting and dynamic pricing

At Damavis, we present our hotel demand forecasting model and a dynamic pricing strategy designed to maximize expected profits. The article will soon be available in English as well.

The paper is organized into sections. In section 2 we describe the data set used. In section 3, we present the model for forecasting future demand, based on time series. In section 4, we explain how this forecast is used to generate possible future scenarios through Monte Carlo simulations. In section 5, we study the effect of price on demand using historical data.

These results will be used in section 6 to calculate the optimal price on the simulations generated in section 4. In section 7 we present the results obtained in each of the previous sections. Finally, in section 8 we summarize the work done and propose possible lines of improvement.
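The pipeline described above (forecast, Monte Carlo scenarios, price effect, optimal price) can be sketched roughly as follows. This is only an illustration under strong assumptions, not the model from the paper: the Gaussian noise around the forecast, the linear price effect, and every name and parameter (`simulate_demand`, `demand_at_price`, `sensitivity`, `capacity`, `unit_cost`) are hypothetical.

```python
import random


def simulate_demand(forecast, noise_sd, n_scenarios, rng):
    """Generate demand scenarios around a point forecast (assumed Gaussian noise)."""
    return [max(0.0, rng.gauss(forecast, noise_sd)) for _ in range(n_scenarios)]


def demand_at_price(base_demand, price, ref_price, sensitivity):
    """Assumed linear price effect: demand falls as price rises above ref_price."""
    factor = 1.0 - sensitivity * (price - ref_price) / ref_price
    return max(0.0, base_demand * factor)


def expected_profit(price, scenarios, ref_price, sensitivity, capacity, unit_cost):
    """Average profit over all simulated scenarios for one candidate price."""
    total = 0.0
    for base_demand in scenarios:
        demand = demand_at_price(base_demand, price, ref_price, sensitivity)
        sold = min(capacity, demand)  # cannot sell more rooms than exist
        total += (price - unit_cost) * sold
    return total / len(scenarios)


def best_price(candidates, scenarios, ref_price, sensitivity, capacity, unit_cost):
    """Pick the candidate price with the highest expected profit."""
    return max(
        candidates,
        key=lambda p: expected_profit(
            p, scenarios, ref_price, sensitivity, capacity, unit_cost
        ),
    )


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is reproducible
    scenarios = simulate_demand(forecast=80, noise_sd=15, n_scenarios=1000, rng=rng)
    prices = [60, 80, 100, 120, 140]
    print(best_price(prices, scenarios, ref_price=100, sensitivity=1.2,
                     capacity=100, unit_cost=20))
```

Evaluating each candidate price on the same set of scenarios keeps the comparison fair, since all prices face identical simulated demand.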

You can read the full article below:

Job offer

We are expanding our data engineering team! Our most important values are the quality of our team and of the service we offer, so we attach great importance to the people who belong to the team.

It is just as important that we meet candidates' expectations as that candidates meet ours. So before we tell you what we are looking for, let us tell you what we offer:

- Work with a team of great professionals with years of experience who are experts in Big Data and Artificial Intelligence.

- Remote work and work-life balance.

- Continuous training with official qualifications.

- Support from our Data Engineer Heads during the first weeks (or for as long as required) so that you adapt fully to the work and production environment.

- Daily meetings to resolve doubts and questions, as well as to keep up to date with everything that happens in the different work teams.

- New work teams and projects from time to time, so that you gain new experience and face new challenges.

- Salary in line with the knowledge and responsibilities you acquire.

Some of the most important values at Damavis:

- Teamwork

- Quality

- Knowledge

- Assertiveness

- Effectiveness

- Efficiency

- Sustainability

And now, if you think we are the right place for you, we will tell you what we are looking for in our new Data Engineer: JOB OFFER

Seen on social media

During the week we share the most interesting news from the world of big data and artificial intelligence on our social networks: Twitter, Facebook, Instagram and LinkedIn.

Scala 3 is here

After 8 years of work, 28,000 commits, 7,400 pull requests, 4,100 closed issues – Scala 3 is finally out. Since the first commit on December 6th 2012, more than a hundred people have contributed to the project.

Today, Scala 3 incorporates the latest research in type theory as well as the industry experience of Scala 2. Its developers have seen what worked well (or not so well) for the community in Scala 2 and, based on this experience, created the third iteration of Scala: easy to use, learn, and scale.

Here is the link to Git: Scala 3

DELPHI, an artificial intelligence framework that predicts high-impact research

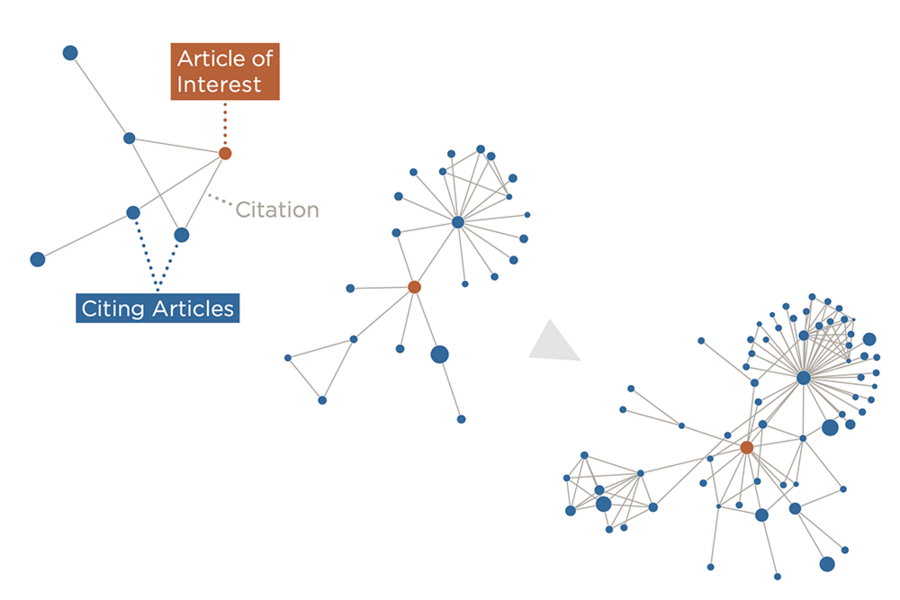

MIT researchers have designed a machine learning framework that, by analyzing the graph of historical scientific research, is able to predict the future impact of scientific research.

The researchers see DELPHI as a tool that can help humans better leverage funding for scientific research, identifying “diamond in the rough” technologies that might otherwise languish and offering a way for governments, philanthropies, and venture capital firms to more efficiently and productively support science.

Here is the link to the full article: Using Machine Learning To Predict High-Impact Research

Google I/O 2021: Being helpful in moments that matter

Google I/O 2021, completely free and virtual for the first time, kicked off this week, bringing plenty of news about Android and other Google products.

And that is the summary of week 20 of 2021. We invite you to share this article with your contacts. See you on social media!

Best regards, Damavis