CUDA is the language used for programming on NVIDIA GPUs, it is vital for a large number of computing tasks, and yet it is a mystery to a large number of programmers. In this post, we attempt to explain some of the mystery of CUDA and help you understand the special paradigm it requires.

What is CUDA?

As a language, CUDA’s syntax is extremely similar to C, and you would think that if you knew how to program C, you could program CUDA without any difficulty. But if one were to try to run a code programmed for CPU on GPU, we observe that it is several magnitudes slower than just running it on CPU. This phenomenon is strange, as we would expect the full power of the GPU to make the program faster, but a single thread on a CPU would be considerably faster.

This paradox is due to the change of architecture. The vast majority of programs are written for an architecture that assumes that there are one or several threads and each one executes its given code, often not even being the same code in each thread. This architecture is known as SISD (Single Instruction Single Data) or SIMD (Single Instruction Multiple Data), depending on the instruction set the CPU has, which means that in a CPU a single instruction usually handles one or more data.

Each thread has its own CPU core associated with its own program counter (register that stores the next instruction to be executed in the CPU). Two threads do not share program counters, even if they would execute the same code, they would do so with different program counters.

CUDA architecture: Streaming Multiprocessor

However, this is not the case with CUDA. Here we have that the smallest processing unit is not the core, it is the SM (Streaming Multiprocessor). An SM has a single program counter, but it does not have a single core. Whereas in the CPU we would see one program counter per core, in a GPU we see multiple cores per SM, that is, multiple cores can only perform the execution dictated by the program counter they share.

For example, we have in the code a simple if. In this case, the SM would ‘shut down’ the cores that do not pass the if and only execute those that do. Once finished, it would execute those that did not pass, since having a single program counter forces only one thread of instructions to be followed at a time. This architecture is called SIMT (Single Instruction Multiple Threads).

This type of architecture is not used for its own sake, but because, natively, it allows for highly parallel code execution. In fact, that is the only way to run the code. If the code did not benefit from this architecture, the GPU would perform very poorly.

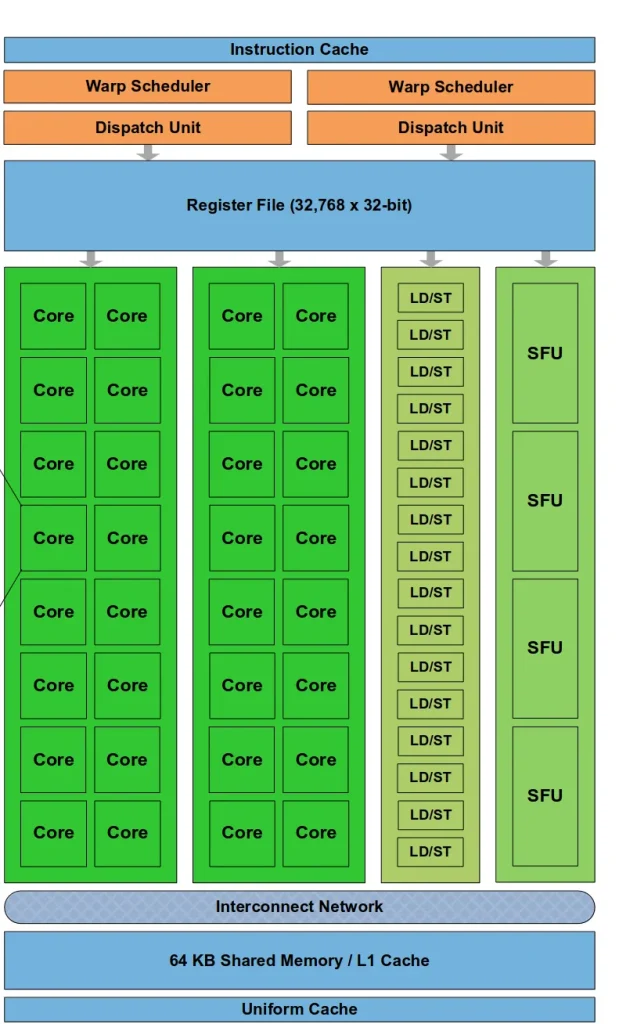

Example of CUDA FERMI architecture

In the following image we show the architecture of an SM, specifically FERMI’s CUDA architecture. We can see how the warp has a file register (where the variables declared in the code are stored), an L1 cache (which we usually call shared memory and is mainly and directly managed by the code itself), an interconnection network (which allows several threads inside a warp to talk to each other) and several cores.

Each core is assigned a thread and, as we can see, in this specific architecture there are two sets of 16 cores, being able to split the warp into something called half-warp. This is mainly due to backwards compatibility with pre-FERMI architectures, where warps were 16-core. It also has 16 LD/ST (load/store units), allowing 16 threads to read and write to memory at the same time. And finally, 4 SFU (Special Function Unit) units that offer the possibility of executing special functions such as cos, sin or tan, among others.

Threads, Blocks and Grids

Because of this architecture, it is necessary to create a hierarchy to separate threads from each other. To do this, a 3-level system is used: first we have the threads, then the thread blocks and finally the grid.

This hierarchy indicates which resources the threads can share. For example, threads within the same warp can share everything using the interconnect network, but threads within the same thread block can only share shared memory (a type of user-controlled cache), while threads in different blocks do not share anything.

While this hierarchy is interesting, in this post we will focus on the lowest level of the hierarchy, the warps. These are the minimum unit of execution, that is, when threads are executed, they are executed inside a warp and a warp is executed, in turn, in a SM.

What is a Warp in CUDA?

A warp currently consists of 32 threads, although in older GPU architectures we can see warps of 16 threads. These 32 threads share a single program counter, which means that they execute the same instruction at the same time.

__global__ void sum(float *a)

{

__shared__ float shared_mem[32];

int idx = threadIdx.x;

shared_mem[idx] = a[idx];

for(int threshold=16; idx<threshold; threshold>>=1)

{ shared_mem[idx]+=shared_mem[idx+threshold]; }

if (idx==0) {

a[0]=shared_mem[0];

}

}This program is simple, but we can already see the complexity as we have several elements that discriminate by the ID of the thread, which we get with threadIdx.x. At the beginning, the 32 threads work, then 16… and finally 1. But the code is the same for all of them, requiring this discrimination by ID.

Shared memory and global memory

As you can see from the __shared__ identifier, the data is first sent to shared memory. While you could do everything from global memory, it is preferable to do it from shared memory due to the inefficiency of operating in global memory, so while we won’t explain much about shared memory in this post, we will make use of it for best practices.

After sending the data to shared memory, it simply does a reduction where each thread adds 2 elements and halves the number of threads working until there is only 1 left and finishes, writing the result back to global memory.

Now that we see the program for 32 threads we can extend it to 256 threads including multiple warps.

__global__ void sum(float *a)

{

__shared__ float shared_mem[256];

int idx = threadIdx.x;

shared_mem[idx] = a[idx];

for(int threshold=128; idx<threshold; threshold>>=1) {

shared_mem[idx]+=shared_mem[idx+threshold];

}

if (idx==0) {

a[0]=shared_mem[0];

}

}The code is essentially the same, just the shared memory is now 256 floats, and the threshold is 128, but this code is no longer correct. While it is true that it will usually give the expected result, it sometimes makes mistakes.

Automatic synchronization in CUDA

In CUDA there is normally no automatic synchronisation. Now that we have 256 threads, there are 8 warps working simultaneously and they have different program counters, so one may get ahead of the others and not wait. For example, if the warp of the first 32 threads were to go ahead, the result would only be the sum of the first 32 elements.

For this, there are synchronisation primitive functions, as we can see in the following code.

__global__ void sum_synchronized(float *a)

{

__shared__ float shared_mem[256];

int idx = threadIdx.x;

shared_mem[idx] = a[idx];

__syncthreads();

for(int threshold=128; idx<threshold; threshold>>=1) {

shared_mem[idx]+=shared_mem[idx+threshold];

__syncthreads();

}

if (idx==0) {

a[0]=shared_mem[0];

}

}The script is basically the same, except that we call __syncthreads() every time we want to make sure that no warp diverges. This function ensures that every warp that belongs to the same thread block will be in the same instruction. This synchronisation is only at the thread block level, but in this article we won’t go into detail to describe it, it is only necessary to know that this code is contained in a single thread block.

Syncrhonization functions

Now we have a functional but quite inefficient code, since we are passing data to a shared memory and then operating on it, when we have seen in the architecture of an SM, that there is something called ‘interconnect network’. To implement it, we can make use of certain primitives at the warp level as shown in the following code.

__global__ void sum_synchronized(float *a)

{

int idx = threadIdx.x;

float val = a[idx];

for (int offset = 16; offset > 0; offset /= 2) {

val += __shfl_down_sync(0xffffffff, val, offset);

}

if(idx==0) {

a[0]=val;

}

}The function used is __shfl_down_sync(synchronization_mask, val, offset), which adds the ‘val’ variable of two different threads located at an ‘offset’ between them and forces the synchronisation of the threads given a mask. For example, in the code we use 0xffffffffffff, which indicates to synchronise every thread inside the warp. Cases of dyssynchrony would be rare. This primitive allows us to skip using shared memory, and we can directly add the values by adding their variables together.

Example of syncrhonization functions

Finally, we can apply these warp primitives to make the 256-element example efficient:

__global__ void sum_synchronized(float *a)

{

int idx = threadIdx.x;

float val = a[idx];

__shared__ float shared_mem[8];

for (int offset = 16; offset > 0; offset /= 2) {

val += __shfl_down_sync(0xffffffff, val, offset);

}

if(idx%32==0) {

shared_mem[idx>>5]=val;

}

__syncthreads();

if (idx<=8) {

val=shared_mem[idx];

val += __shfl_down_sync(0xffffffff, val, 4);

val += __shfl_down_sync(0xffffffff, val, 2);

val += __shfl_down_sync(0xffffffff, val, 1);

if (idx==0) {

a[0]=val;

}

}

}We do the warp sum as before, but here something interesting happens. Once we have added by warp, we have the problem that there are 8 concurrent warps and we have to share information between them. In particular, a single value for each one. To do this, we reserve 8 spaces in shared memory and, for each warp, the first thread writes the sum to shared memory. Then we run __syncthreads(), since we may have a dyssynchrony. Finally, we use the first warp to sum those 8 elements by 1 and write the result.

Conclusion

GPU architecture, and especially CUDA, is very different from what we imagine when we program on CPUs. For this reason, we need to think about alternatives when performing this task on GPUs.

In particular, we need to focus on the thread paradigm. The code is divided into threads, but every thread executes exactly the same code, which forces us to identify each thread with an ID and assign a part of the work following that ID.

In addition, we must take into account that we do not have synchronisation mechanisms and that any communication above the level of a warp will require mechanisms to synchronise, such as __syncthreads(), which synchronises at the thread block level.

Despite this, this paradigm is important, as it allows us to run highly parallel code, allowing tasks that can take days on CPUs to run on GPUs in minutes.

And that is all! If you found this post interesting, we encourage you to visit the Algorithms category to see all the related posts and to share it on social networks. See you soon!