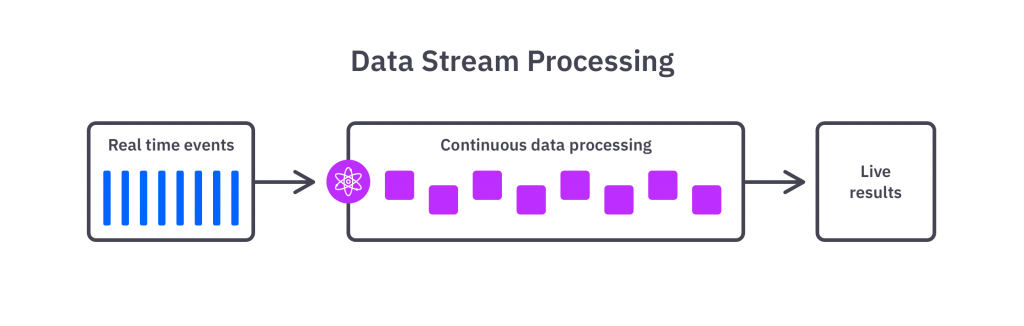

In recent years, low-latency data processing, practically in real time, has become a requirement that companies increasingly demand of their big data processes. This is the context in which stream processing arises: the set of methodologies used to process data continuously, so that results are available with low latency as soon as the data arrives.

In this post we are going to delve into Structured Streaming, the high-level API provided by Apache Spark for processing massive data streams in near real time. To do so, we will look at the possibilities and limitations of this API, go over its basic concepts, and provide the theoretical tools you need to plan your first streaming ETL.

Structured Streaming deep dive

Structured Streaming was introduced in Spark 2.0 and has been considered production-ready since Spark 2.2. It provides a high-level interface for defining new pipelines and adapting existing ones to stream processing within the Apache Spark framework.

One of its strengths is that it uses the same operations we use for classic batch processing. This makes moving previously developed batch processes to a streaming context simple and largely transparent, so we can take advantage of streaming without adding or modifying large amounts of code. Because practically the same code processes the data in batch and in streaming, there is no need to maintain two versions of the same pipeline for two closely linked contexts.

Prior to this API, Apache Spark already had tools to support streaming data processing thanks to its Spark Streaming interface and its DStreams API.

Multiple organizations have used these tools to move their applications to a real-time context. However, these interfaces are based on relatively low-level operations and concepts, which makes development and adoption difficult and complicates the optimization of high-level processing pipelines.

This is exactly why an interface closely aligned with Spark’s DataFrame and Dataset APIs was needed, allowing a simpler and better-optimized way to develop streaming applications. Unlike with DStreams, once we implement a pipeline for data streams, Structured Streaming transparently optimizes the whole procedure according to the transformations performed, in the same way that Spark optimizes execution plans for batch queries.

Structured Streaming key concepts

Now that we have introduced Structured Streaming and the use cases that motivate it, let’s review the main concepts it is built on and the pieces that must be in place to use this API in practice.

Input Data Sources

We can define an input data source as an abstraction representing an entity that provides a continuous flow of data over time that can be consumed by our process. Below are some input data sources available with Structured Streaming:

- Files: Structured Streaming can ingest data directly from a file storage system, processing as a data stream the new files that appear under a specific directory. The supported file formats include the most common ones, such as JSON, CSV, ORC and Parquet.

- Apache Kafka: This technology is widely known for providing a distributed message queue that is capable of decoupling those entities that produce data from those that consume it. Given its relevance in the streaming data world, Structured Streaming provides an interface to consume a stream of events coming from a Kafka queue.

- TCP Socket: Another possibility is to consume a text-based data stream by connecting to a TCP server via a socket. In order to use this alternative, the data stream must be UTF-8 encoded.
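
As a minimal PySpark sketch of the three sources above, the helpers below each return a streaming DataFrame. They assume an existing `SparkSession` is passed in as `spark`. The function names, directory, schema, broker address and topic are hypothetical placeholders, and the Kafka source additionally assumes that the Spark Kafka connector package is available to the application.

```python
# Hypothetical helpers: each one returns a streaming DataFrame.
# `spark` is assumed to be an existing SparkSession.

def read_json_files(spark, directory, schema_ddl):
    # File sources need an explicit schema by default; a DDL string such as
    # "device_id STRING, temperature DOUBLE" is accepted.
    return spark.readStream.schema(schema_ddl).json(directory)


def read_kafka_topic(spark, bootstrap_servers, topic):
    # Each Kafka record arrives with binary `key` and `value` columns,
    # which usually have to be cast or parsed afterwards.
    return (
        spark.readStream.format("kafka")
        .option("kafka.bootstrap.servers", bootstrap_servers)
        .option("subscribe", topic)
        .load()
    )


def read_socket(spark, host, port):
    # Produces one row per UTF-8 text line, in a single `value` column.
    return (
        spark.readStream.format("socket")
        .option("host", host)
        .option("port", port)
        .load()
    )
```

A call such as `read_kafka_topic(spark, "localhost:9092", "events")` only declares the source; nothing is read until a query is started on the resulting DataFrame.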

Data transformations

As we have previously mentioned, the transformations in a streaming context with Spark are practically identical to the transformations that we would carry out in a batch context, since the Spark API is internally responsible for handling the data and applying the corresponding transformations regardless of whether we are working in streaming or batch.

Even so, certain limitations and considerations arise when applying some transformations in a streaming context, as is the case with aggregations and joins. There are also operations that make little sense on data flows with no beginning or end, such as sorting, and that are not allowed by default when working in streaming.

Next, we will look at the different types of transformations, with special emphasis on their limitations and implications when working with Structured Streaming.

The first group of transformations consists of data filtering and selection operations, such as filter, select or where. All of these are supported without limitations in Structured Streaming and behave exactly as they would in batch.
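
As an illustration, a function like the following sketch should work unchanged whether the DataFrame it receives is a batch or a streaming one. The column names `device_id` and `temperature`, the function name and the threshold are hypothetical.

```python
def hot_readings(readings, threshold=30.0):
    # `readings` may be a batch DataFrame (spark.read) or a streaming one
    # (spark.readStream). where/select are stateless, so every record is
    # handled independently of the others.
    return (
        readings
        .where(f"temperature > {threshold}")
        .select("device_id", "temperature")
    )
```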

Grouping data to obtain metrics is also well supported on streaming data. These operations have no particular limitations, but they can have important implications for your streaming application: when you aggregate by a field, Spark stores an internal state with the intermediate results so that it can keep the aggregation up to date as new data arrives. Spark handles all the complexity of this state internally, maintaining and updating this intermediate structure in memory so that your aggregations stay consistent with all the data processed by your ETL.
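
To make the idea of internal state concrete, here is a plain-Python model (not Spark code) of what a running count per key has to remember between micro-batches. In Spark the equivalent query would look something like `df.groupBy("device_id").count()`; the batches and keys below are made up for illustration.

```python
from collections import Counter


def update_state(state, micro_batch):
    """Fold one micro-batch of keys into the running counts and return them."""
    state.update(micro_batch)  # Counter.update adds to the existing counts
    return state


state = Counter()  # survives across micro-batches, like the state Spark keeps
update_state(state, ["a", "b", "a"])
update_state(state, ["b", "c"])
print(dict(state))  # {'a': 2, 'b': 2, 'c': 1}
```

In this model the state holds one entry per distinct key, so its size depends on how many different keys the stream contains rather than on how many records have been processed.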

Additionally, Spark supports aggregations that are specific to streaming data processing, such as groupings by time windows, giving you tools to handle or discard data that comes in late.
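
The following plain-Python model sketches how time windows and late data interact. It is a simplification, not Spark code: in Spark the same idea is expressed with the `window` function and `withWatermark`, and the watermark advances between micro-batches rather than per record. Times are plain integers, and the function name and parameters are hypothetical.

```python
def tumbling_window_counts(event_times, window_size, allowed_lateness):
    """Count events per tumbling window, dropping events that arrive too late.

    `event_times` lists event timestamps (e.g. seconds) in arrival order.
    """
    counts = {}      # window start -> number of events
    dropped = []     # events discarded as too late
    max_seen = None  # highest event time observed so far
    for t in event_times:
        watermark = None if max_seen is None else max_seen - allowed_lateness
        window_start = (t // window_size) * window_size
        window_end = window_start + window_size
        if watermark is not None and window_end <= watermark:
            dropped.append(t)  # its window is already considered final
        else:
            counts[window_start] = counts.get(window_start, 0) + 1
        max_seen = t if max_seen is None else max(max_seen, t)
    return counts, dropped


counts, dropped = tumbling_window_counts(
    [1, 4, 12, 3, 27, 8], window_size=10, allowed_lateness=5
)
print(counts)   # {0: 3, 10: 1, 20: 1}
print(dropped)  # [8]
```

The event at time 3 arrives out of order but is still counted, because the watermark (12 - 5 = 7) has not yet passed the end of its window. The event at time 8 arrives after the watermark has reached 22, so its window is closed and the event is discarded.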

Finally, joins are supported with certain limitations on streaming data, both when joining a static dataset to a streaming dataset and when joining two streaming datasets. The limitations of each scenario are discussed below:

- Stream-Static: When joining a streaming DataFrame (left side of the join) with a static DataFrame (right side of the join), the “inner”, “left outer” and “left semi” join types are supported. The “right outer” and “full outer” types are not supported in this scenario and will raise an error if used.

- Stream-Stream: When joining two streaming DataFrames, all join types are supported, but with limitations. At least one of the two sides (which one depends on the join type) must specify time constraints for the join to be possible, for example making explicit how long a record is kept in the internal state of the application while waiting for its counterpart from the other dataset to arrive.
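
Both scenarios can be sketched in PySpark roughly as follows. This is an illustration under assumptions: the function names, column names (`impression_time`, `click_time`, `impression_ad_id`, `click_ad_id`) and time thresholds are hypothetical, and the guard in `enrich_stream` simply encodes the join types listed above rather than anything Spark exposes.

```python
# Join types listed above as supported when the streaming DataFrame is on
# the left and the static DataFrame is on the right.
STREAM_STATIC_JOIN_TYPES = ("inner", "left_outer", "left_semi")


def enrich_stream(stream_df, static_df, key, how="inner"):
    # Fail early with a clear message instead of waiting for Spark's error.
    if how not in STREAM_STATIC_JOIN_TYPES:
        raise ValueError(f"join type {how!r} is not supported for stream-static joins")
    return stream_df.join(static_df, on=key, how=how)


def join_streams(impressions, clicks):
    from pyspark.sql.functions import expr  # imported lazily; requires PySpark

    # Watermarks bound how long each side is buffered in the internal state.
    impressions = impressions.withWatermark("impression_time", "2 hours")
    clicks = clicks.withWatermark("click_time", "3 hours")
    # The time-range condition tells Spark when a buffered row can no longer
    # find a match and may be dropped from the state.
    return impressions.join(
        clicks,
        expr("""
            click_ad_id = impression_ad_id AND
            click_time >= impression_time AND
            click_time <= impression_time + interval 1 hour
        """),
    )
```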

Data Sinks and Write modes

Once the data has been acquired from a source and processed, we need to define what to do with it. This is where the concept of a data sink comes in: the abstraction that represents the external system to which we want to move or publish the processed data. The sink works as a kind of adapter that defines where the output of the data stream is written, making sure the data reaches its destination in a robust, error-resilient way. Structured Streaming supports several types of sinks:

- File Sink: This is the simplest sink. The output of the stream goes to a particular directory of a specified file system, where newly processed data is written as new files. Like the file-based data source, this sink supports several common formats such as JSON, Parquet, CSV or plain text.

- Apache Kafka Sink: Writing the output data as a new event published in a Kafka queue can be very interesting to integrate the results of your process with other streaming processes that coexist in your ecosystem. This type of sink facilitates this task, publishing the output of your streaming process in a queue in a transparent and simple way.

- In-memory Sink: This sink creates a temporary in-memory table that can be queried to obtain real-time results on the processed data. Unlike the previous sinks, it offers no recovery in case of failure, so it can lead to permanent loss of the processed data.

- Console Sink: Displays on screen the processed data in streaming, which is especially useful to carry out development tasks or to search for errors.

Both the in-memory sink and the console sink are meant for experimentation and development, and they are not recommended in a production environment because of their lack of robustness and the possibility of permanent data loss. As with data sources, there are connectors that make it possible to use third-party technologies as sinks that are not included by default in Structured Streaming.
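
The four sinks described above could be wired up along these lines in PySpark. This is only a sketch: the function names, paths, broker address, topic and table name are hypothetical. The `checkpointLocation` option is not discussed in this post; it is the directory where Spark stores the progress information that the file and Kafka sinks rely on to recover after a failure. The Kafka sink also assumes the Spark Kafka connector package is available.

```python
def write_parquet(df, output_dir, checkpoint_dir):
    # File sink: new results land as new Parquet files under `output_dir`.
    return (
        df.writeStream.format("parquet")
        .option("path", output_dir)
        .option("checkpointLocation", checkpoint_dir)
        .start()
    )


def write_kafka(df, bootstrap_servers, topic, checkpoint_dir):
    # Kafka sink: `df` must expose a `value` column (string or binary).
    return (
        df.writeStream.format("kafka")
        .option("kafka.bootstrap.servers", bootstrap_servers)
        .option("topic", topic)
        .option("checkpointLocation", checkpoint_dir)
        .start()
    )


def write_memory(df, table_name):
    # In-memory sink: results become a temporary table named `table_name`
    # that can be queried with spark.sql(...). Development only.
    return df.writeStream.format("memory").queryName(table_name).start()


def write_console(df):
    # Console sink: prints each micro-batch. Development and debugging only.
    return df.writeStream.format("console").start()
```

Each helper returns the running query object produced by `start()`, which can then be stopped or awaited by the caller.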

To write or publish the output of your streaming ETL, you also need to define the output mode with which the data stream will be processed. Structured Streaming currently supports three output modes:

- Append mode: This is the default mode. It simply appends new records to the output as streaming data is processed, ensuring that each processed record is output only once. This mode does not make sense, and is not supported, when the processing includes aggregations that force Spark to maintain an internal state whose intermediate results can still be updated; the exception is an aggregation with a watermark, where a result is emitted once it can no longer change.

- Complete mode: The complete mode will produce as output the entire result of the data processed so far to update the output in the sink. This type of output mode is designed for scenarios in which data processing involves aggregations that require maintaining an internal state of the processed data, which must be completely updated in the output every time new data arrives.

- Update mode: This third output mode is very similar to complete mode, except that it only outputs the records of the result that have changed with respect to the previous outputs produced by the pipeline. This mode limits the sinks you can use to persist your results, since the sink must support record-level updates. When the data processing does not contain aggregations, this mode is equivalent to the append mode explained above.
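
The difference between complete and update mode is easiest to see on a running count. The snippet below is a plain-Python model of what each mode would hand to the sink after every micro-batch, not Spark code; in Spark the mode is chosen with `outputMode("complete")` or `outputMode("update")` on the writer. The function name and sample batches are hypothetical.

```python
from collections import Counter


def emit(mode, state, micro_batch):
    """Update the running counts with one micro-batch and return what is written."""
    before = dict(state)
    state.update(micro_batch)
    if mode == "complete":
        return dict(state)  # the whole result table, every time
    if mode == "update":
        # only the rows whose value changed in this micro-batch
        return {k: v for k, v in state.items() if before.get(k) != v}
    # Append cannot be modelled here: these counts keep changing after
    # they are first produced (there is no watermark to finalize them).
    raise ValueError(f"mode {mode!r} is not supported for this aggregation")


complete_counts, update_counts = Counter(), Counter()
for batch in (["a", "b", "a"], ["b", "c"]):
    print(emit("complete", complete_counts, batch), emit("update", update_counts, batch))
# {'a': 2, 'b': 1} {'a': 2, 'b': 1}
# {'a': 2, 'b': 2, 'c': 1} {'b': 2, 'c': 1}
```

After the second micro-batch, complete mode rewrites all three rows, while update mode only emits `b` and `c`, the two keys whose counts changed.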

Finally, one additional setting controls how often the next mini-batch of data is processed once an iteration of streaming processing has finished: the trigger. The most commonly used trigger is based on processing time, and it specifies the interval we want to leave between one mini-batch and the next. If no trigger is specified, Spark by default processes the next mini-batch as soon as it finishes the previous one.
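
A processing-time trigger is set on the writer together with the output mode. The sketch below assumes a streaming DataFrame `df` without aggregations (so append mode applies) and uses the console sink purely for illustration; the function name and the interval value are hypothetical.

```python
def start_query(df, interval=None):
    writer = df.writeStream.format("console").outputMode("append")
    if interval is not None:
        # e.g. interval="30 seconds": start a new micro-batch every 30 seconds.
        writer = writer.trigger(processingTime=interval)
    # With no trigger set, the next micro-batch starts as soon as the
    # previous one has finished.
    return writer.start()
```

Calling `start_query(df, "30 seconds")` spaces the micro-batches out, whereas `start_query(df)` keeps the default behaviour described above.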

Conclusion

In this article, we have introduced the Structured Streaming API of Spark, which allows us to implement a streaming ETL in a simple and effective way. We have covered the most relevant concepts of this interface, providing the theoretical tools that any data engineer needs to start developing a first pipeline to process data streams in real time.
