Airflow 3.0.0 was recently released, bringing several new features to both its core functionality and its graphical interface. In this post, we will discuss the most interesting changes.

DAG versioning

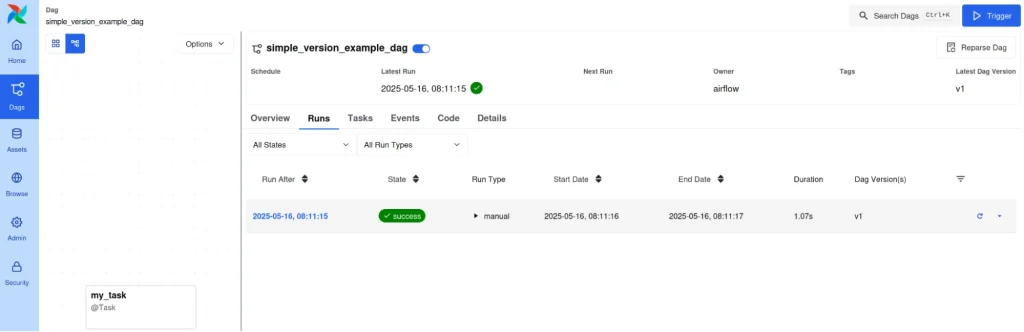

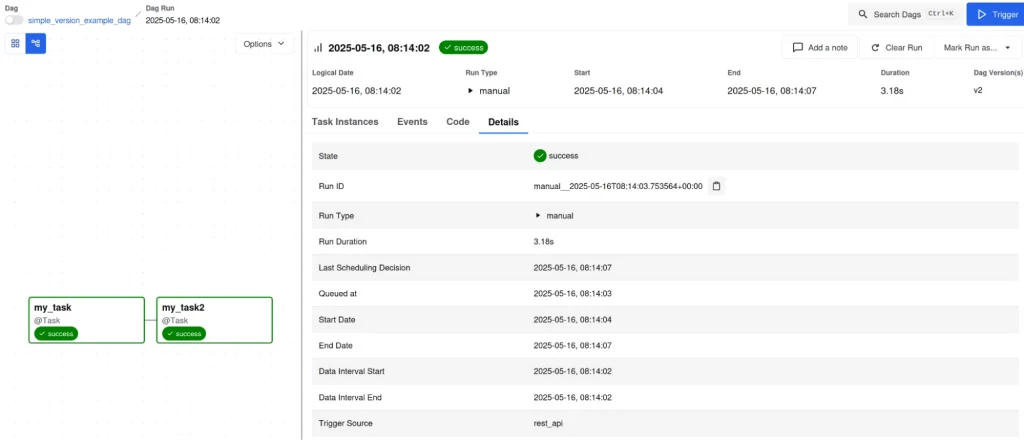

One of the new features of Airflow 3.0 is DAG versioning. To see it in action, we create a simple DAG and run it, which gives us the following:

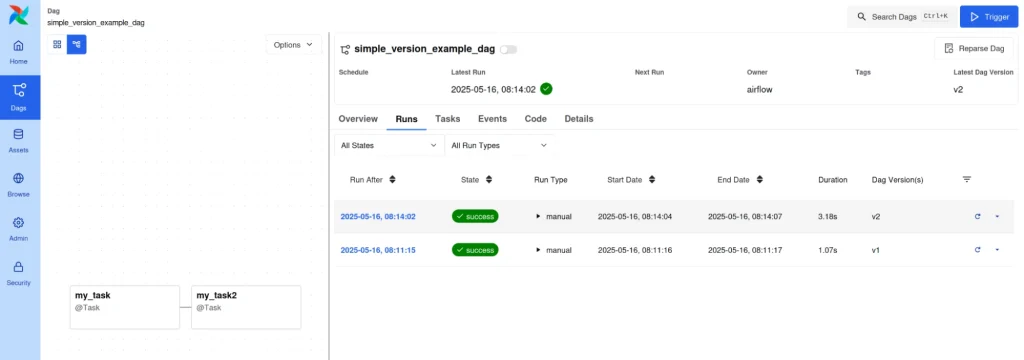

In the details of the executed DAG runs, we can see the version of the DAG that was used for each run. If we now modify that same DAG, save it and run it again, we get:

As you can see, we have a new DAG run with a different version. In addition, if the DAG is modified after a DAG run has been launched, Airflow re-parses the DAG and creates a new version, but it does not alter the DAG run in progress, which keeps running the version it started with. This greatly simplifies version control and adds a layer of auditing that was previously not available natively.
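The behaviour described above can be pictured with a small standalone sketch. It is a toy model, not Airflow's implementation: the `VersionedDag` class, the `structure_hash` helper and the idea of fingerprinting the DAG structure with a hash are all assumptions made for illustration. It only shows the two properties discussed here: a changed DAG gets a new version number, and a run stays pinned to the version it started with.

```python
import hashlib
import json
from dataclasses import dataclass, field


def structure_hash(dag_structure: dict) -> str:
    """Stable fingerprint of a DAG definition (hypothetical helper)."""
    payload = json.dumps(dag_structure, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class VersionedDag:
    """Toy registry: one version number per change in the DAG structure."""
    dag_id: str
    hashes: list = field(default_factory=list)  # position + 1 is the version number
    runs: dict = field(default_factory=dict)    # run_id -> version pinned at launch

    def parse(self, dag_structure: dict) -> int:
        """Register the structure and return the current version number."""
        digest = structure_hash(dag_structure)
        # Only a structure that differs from the latest one creates a new version.
        if not self.hashes or self.hashes[-1] != digest:
            self.hashes.append(digest)
        return len(self.hashes)

    def start_run(self, run_id: str) -> int:
        """Pin the run to the latest version known at launch time."""
        self.runs[run_id] = len(self.hashes)
        return self.runs[run_id]


dag = VersionedDag("example_dag")
dag.parse({"tasks": ["extract", "load"]})
first = dag.start_run("run_1")
# The DAG is edited and re-parsed while run_1 is still considered active.
dag.parse({"tasks": ["extract", "transform", "load"]})
second = dag.start_run("run_2")
print(first, second, dag.runs["run_1"])  # 1 2 1
```

In this model, `run_1` still reports version 1 after the edit, which is the auditing property the version column in the interface exposes.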

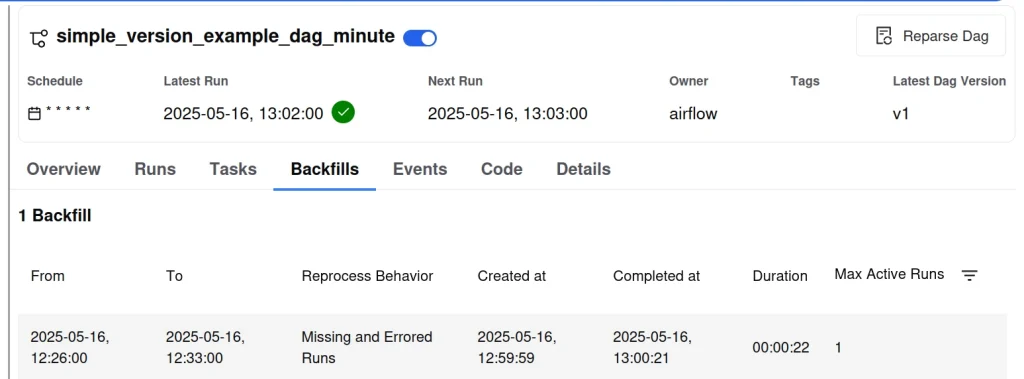

Backfills in Airflow 3.0

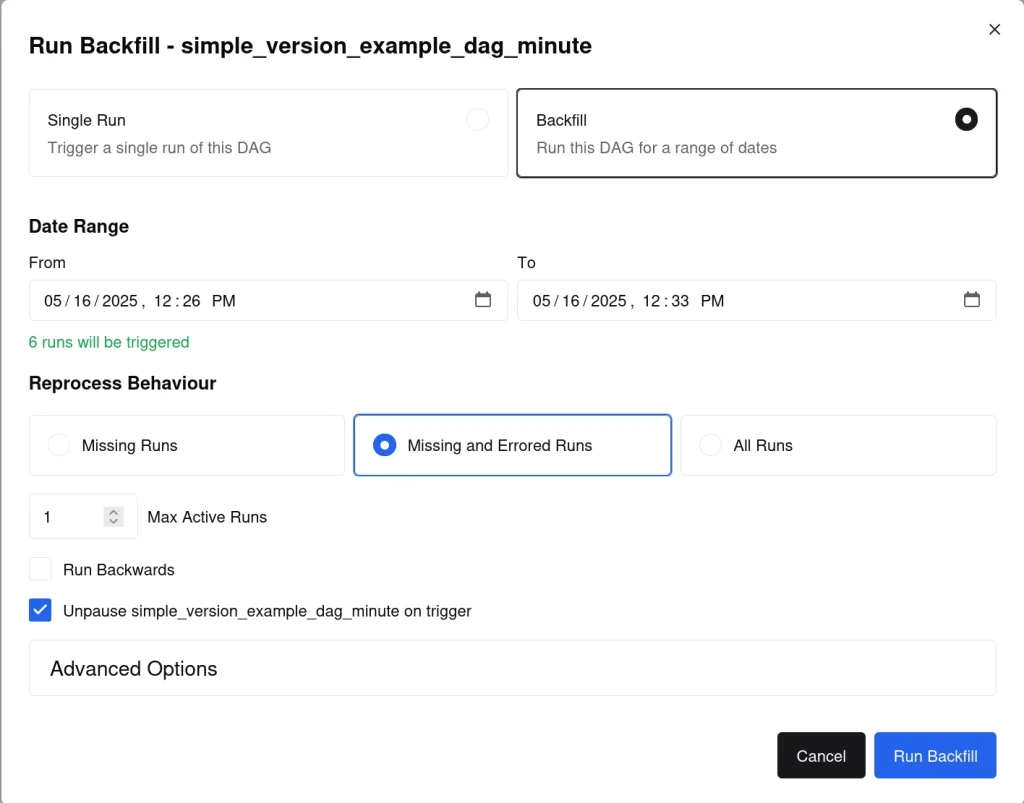

It is now possible to run backfills from both the CLI and the graphical interface. Backfills are handled much like DAG runs themselves, which makes it easy to re-execute past periods.

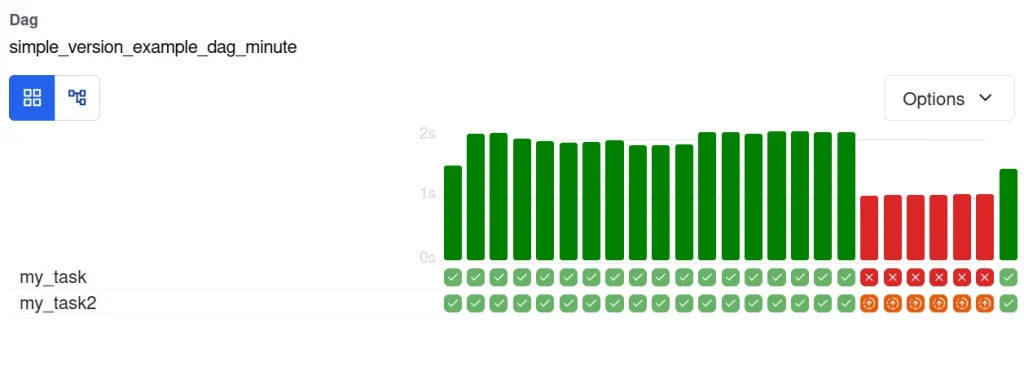

In this case, we have a DAG with a period of failed runs that we want to reprocess. To do this, we use a backfill.

We specify the time interval that the backfill should cover and select the option that reprocesses only the DAG runs that failed.

Once the backfill has run, we can see that the failed DAG runs have been reprocessed successfully.

In addition, the backfill itself is recorded, which makes auditing easier.
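The selection logic of a backfill restricted to failed runs can be sketched in plain Python. This is an illustrative model only, not Airflow code: the `RunRecord` type, the `select_for_backfill` function, the inclusive date bounds and the sample history are all assumptions invented for the example.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class RunRecord:
    """Minimal stand-in for a past DAG run (hypothetical type)."""
    logical_date: date
    state: str  # "success" or "failed" in this sketch


def select_for_backfill(runs, from_date, to_date, only_failed=True):
    """Return the dates a backfill would reprocess, oldest first.

    Assumes both ends of the interval are inclusive.
    """
    selected = []
    for run in runs:
        in_range = from_date <= run.logical_date <= to_date
        if in_range and (run.state == "failed" or not only_failed):
            selected.append(run.logical_date)
    return sorted(selected)


# Made-up history with a period of errors in the middle.
history = [
    RunRecord(date(2025, 5, 1), "success"),
    RunRecord(date(2025, 5, 2), "failed"),
    RunRecord(date(2025, 5, 3), "failed"),
    RunRecord(date(2025, 5, 4), "success"),
    RunRecord(date(2025, 5, 5), "failed"),
]

to_rerun = select_for_backfill(history, date(2025, 5, 1), date(2025, 5, 4))
print(to_rerun)
```

With the interval ending on 4 May, only the failed runs of 2 and 3 May are selected; the failure on 5 May falls outside the requested window and the successful runs are left untouched.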

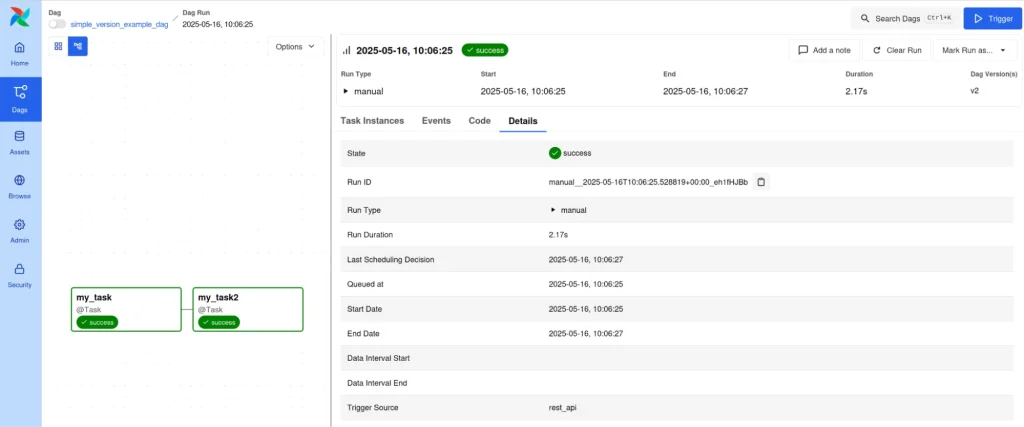

DAG runs without logical_date

In Airflow 3.0, it is possible to have DAG runs without a logical_date. This makes it easier to support workloads that are not based on intervals. Previously, logical_date was part of the ID of a DAG run, so multiple DAG runs with the same date were treated as different attempts of the same DAG run. Now that this date can be set to null, they are separate DAG runs.

As an example, here we can see a DAG run with a logical_date:

Here we can see a run of the same DAG without a logical_date:

As we can see, the Run ID now has a random string at the end to make it unique, and the run has no Data Interval Start or Data Interval End.
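To make the effect on the Run ID concrete, here is a minimal sketch of an ID scheme with the two properties described above. It is not Airflow's own function: `make_run_id`, the `run_after` parameter, the `manual` prefix and the 8-character suffix are assumptions chosen for illustration.

```python
import secrets
import string
from datetime import datetime, timezone


def make_run_id(run_type, run_after, logical_date=None):
    """Build a run ID (hypothetical scheme, for illustration only).

    With a logical_date the ID is fully determined by that date, so two
    runs for the same date collide. Without one, a random suffix keeps
    every run distinct.
    """
    if logical_date is not None:
        return f"{run_type}__{logical_date.isoformat()}"
    alphabet = string.ascii_letters + string.digits
    suffix = "".join(secrets.choice(alphabet) for _ in range(8))
    return f"{run_type}__{run_after.isoformat()}_{suffix}"


now = datetime(2025, 5, 1, 12, 0, tzinfo=timezone.utc)

# Same logical_date: identical IDs, i.e. the same DAG run.
with_date_a = make_run_id("manual", now, logical_date=now)
with_date_b = make_run_id("manual", now, logical_date=now)

# No logical_date: the random suffix makes them separate DAG runs.
without_date_a = make_run_id("manual", now)
without_date_b = make_run_id("manual", now)

print(with_date_a == with_date_b)
print(without_date_a == without_date_b)
```

The first comparison is true because the ID depends only on the date, while the second is false except in the astronomically unlikely case that both random suffixes match.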

Conclusion

In this post, we have covered some of the changes introduced in Airflow 3.0 and how they help streamline various processes, especially auditing and execution control. Applying these new features in future Airflow pipelines will help us get the most out of the tool.

That’s it! If you found this article interesting, we encourage you to visit the Airflow tag with all related posts and to share it on social media. See you soon!