The developers of DBT have set themselves the goal of releasing a new major release every four months, and so far they are delivering. Last October, version 1.9 of this popular framework was released, and has been undergoing corrections. In it, we find some interesting improvements that we are going to analyse below.

The complete changelog of the version can be found on Github. There you will find detailed small fixes as well as new features.

Main new features in DBT 1.9

New selection method called ‘unit_test:’

The ability to add a new selection method called unit_test: has been included to make it easier for users to filter and run exclusively unit tests in DBT. When implemented, the dbt list and dbt test commands should recognise and select only such unit test nodes.

Host parameter in ‘dbt docs server’

A feature is added to allow developers to specify a different link address for the dbt docs serve command, which currently links to 127.0.0.1 by default due to the vulnerability. This has been a popular request in the community, as when setting 127.0.0.1 as the default, some users were having problems with their deployments because they could not easily change this address. This new feature aims to add the option to make it possible to manually specify an alternative link address using a new --host parameter in the command.

Hooks in ‘TestTasks’

Both unit tests and data tests can now be run with adapter-specific settings, such as selecting a custom warehouse in Snowflake before starting the test. This is possible thanks to the incorporation of pre and post-test hooks, which allow the environment to be automatically adjusted according to the requirements of each test. Previously, this feature was only available when running models, but the community can now configure their tests to suit the specific warehouse and resources required, providing greater control and customisation in their testing workflows.

Dynamic naming of Snapshots

Snapshots will be able to automatically generate dynamically created schema and database names, without the need to manually specify target_schema or target_database.

Until this release, snapshots required this information to be explicitly set. This was a bit cumbersome and limited the flexibility when working in environments such as development, test and production. With this improvement, if no target_schema or target_database values are specified, DBT will use the generate_schema_name and generate_database_name macros by default, just like other resources such as models and seeds.

Time spines

This functionality facilitates support for sub-daily granularities, while maintaining query efficiency. With it, we can define a standard granularity standard_granularity_column and add custom granularity custom_granularities_columns with different levels. The idea is that DBT can use the most compatible granularity to optimise performance for each query.

With this configuration, the community will be able to define complex and custom timings directly in their models via YAML, for example:

models:

- name: my_time_spine

description: "my favorite time spine"

time_spine:

standard_granularity_column: date_day # column for the standard grain of your table

custom_granularities_columns: # additional custom granularities for your timespine

- name: fiscal_year

offset: 2

- name: my_other_column

offset: 3

columns:

- name: date_day

granularity: day # set granularity at column-level for standard_granularity_columnSnapshot field configuration

The new version makes it easier to customise the names of meta fields generated in snapshots, such as dbt_valid_from and dbt_valid_to. Until now, if you wanted to rename these columns, you had to do it in a later step of the pipeline. Now, users can specify their own names directly in the snapshot configuration, thus simplifying the management of these fields without the need to create an additional view.

An example would be:

{{

config(

target_database='analytics',

target_schema='snapshots',

unique_key='id',

strategy='timestamp',

updated_at='updated_at',

snapshot_meta_column_names={

dbt_valid_from: grace_valid_from,

dbt_valid_to: grace_valid_to

}

)

}}Checking for ‘graceful failure’ in pre-runs

Now, we can use on-run-start hooks as preliminary checks more effectively. In this update, an on-run-start hook will fail in a controlled way and stop running nodes in the DAG if a validation fails, without stopping DBT execution abruptly or blocking the creation of the run_results.json report.

Snapshot in YAML

Currently, for the definition of a Snapshot we have to generate a file with a projection and add a Jinja configuration. It is proposed to add support for YAML for the definition of a Snapshot. While it is possible that in most cases it would speed up the definition, it is not entirely clear whether flexibility within the projection is lost.

An example definition would be:

snapshots:

- name: orders_snapshot

relation: source('jaffle_shop', 'orders')

config:

schema: snapshots

database: analytics

unique_key: id

strategy: timestamp

updated_at: updated_atThe truth is that there is a lot of discussion about this. In my opinion, there is not much point in keeping the snapshots completely separate from the models, as they look like a model but with a different materialisation. It could be a new materialisation model that has effects only on the DBT engine.

Incremental Microbatch

I saved this feature for last, and I studied the release mainly for this new feature that has been announced since version 1.7.

In this release, the incremental microbatch strategy is introduced, which improves the performance of incremental models by processing data in smaller batches. Instead of processing large volumes in a single batch, multiple partition columns can now be defined, reducing bottlenecks and improving data processing efficiency. In addition, this feature would allow reprocessing of failed batches, so that we would not have to re-generate the entire query in case of a failure due to any runtime problem.

Let’s look at how the microbatch system works. This system is part of the incremental materialisations. Basically, the materialisation is designed to efficiently update the tables in your data warehouse by transforming and loading only new or changed data since the last run. Instead of reprocessing an entire data set each time, incremental models process a smaller number of rows and then add, update or replace those rows in the existing table.

When running this type of model, either on the first run or in incremental updates, DBT splits the processing into several fully independent batches or queries, configured according to the event_time and batch_size parameters.

{{

config(

materialized='incremental',

incremental_strategy='microbatch',

event_time='event_occured_at',

batch_size='day',

lookback=3,

begin='2020-01-01',

full_refresh=false

)

}}

select * from {{ ref('stg_events') }}Here is an example of the configuration consisting of 4 arguments:

event_timeis a timestamp of each record.begindefines the start date of the first batch.batch_sizedetermines the granularity of the batch, that is, day, month, etc.lookbackdefines the number of previous batches to process to capture backlogged records.

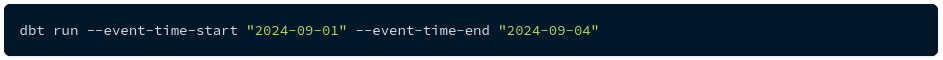

DBT adapts a command so that we can trigger specific periods. This is very useful to perform uploads of historical periods that may have been modified or need some kind of intervention.

What actually happens underneath is that each batch will create a separate query. For example, if the model is run on 1 October, DBT would create separate queries for each day between 28 September and 1 October inclusive (four batches in total).

The query 2024-10-01 would look like this:

select * from (

select * from "analytics"."stg_events"

where my_time_field >= '2024-10-01 00:00:00'

and my_time_field < '2024-10-02 00:00:00'

)

# Depending on its data platform, DBT will choose the most efficient atomic mechanism to insert, update or replace these four batches (2024-09-28, 2024-09-29, 2024-09-30 and 2024-10-01) in the existing tableConclusion

Version 1.9 of DBT introduces improvements that increase flexibility and efficiency in data and, above all, test management. In addition, control over the environment is strengthened and it is easier to run complex tasks with optimised performance.

Finally, we have seen what the microbatching system is all about, which will be very useful for dealing with large amounts of data, especially in cloud warehouses where processing is an important consideration.

And that’s it! If you found this article interesting, we encourage you to visit the Data Engineering category to see all the related posts and to share it on networks. See you soon!