When we imagine a simple programming algorithm, it is logical to think about a succession of instructions that are executed sequentially, where the next instruction will not be executed until the one immediately preceding it has been completed.

However, depending on the nature of the problem, a sequential flow may not be the most desirable option. This is where the concept of concurrency comes in.

In this context, concurrency is nothing more than the ability of a series of instructions to be executed out of order without affecting the result. This makes parallel execution possible, that is, the execution of multiple instructions at the same time.

Travel to the Future in Scala

Scala, a functional programming language, offers an elegant and efficient mechanism for managing parallel operations: Futures.

In Scala, a Future is an object that holds a value which may not exist yet but is expected to be obtained eventually. Futures are commonly used to manage concurrency because they let us continue through the flow of instructions while the expected value is computed in parallel.
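As a minimal sketch of this idea (not part of the original post), the script below starts a Future on Scala's default global `ExecutionContext` and inspects it with `value`, which returns `None` while the computation is still running and `Some(Success(...))` or `Some(Failure(...))` once it has finished. The 500 ms sleep is an assumed stand-in for a slow computation, and `Await.ready` is used only so the demonstration can observe the completed state.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._
import scala.util.Try

// Creating the Future starts the computation on the global ExecutionContext;
// this line returns immediately (the sleep simulates a slow computation)
val futureSquare: Future[Int] = Future {
  Thread.sleep(500)
  12 * 12
}

// The value does not exist yet: `value` is None while the Future is running
val before: Option[Try[Int]] = futureSquare.value

// Meanwhile, the current thread keeps executing other instructions
val localWork: Int = (1 to 10).sum

// For demonstration only: wait (at most 5 seconds) until the Future completes
Await.ready(futureSquare, 5.seconds)

// Now the value is available, wrapped in Some(Success(...))
val after: Option[Try[Int]] = futureSquare.value
println(s"before = $before, after = $after, localWork = $localWork")
```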

Before looking at the different ways to obtain the value of a Future once it is available, we must understand where this characteristic Scala object is executed. Defining a Future is simple, but we must make sure that an ExecutionContext is associated with it so that it can be executed. The ExecutionContext is responsible for execution: it launches the computation of our Future in a new thread, in a thread pool, or even in the current thread, although the latter is not recommended. Scala provides a default global ExecutionContext backed by a ForkJoinPool, which manages the number of threads available for execution (by default, as many as there are available processors). For most applications, this ExecutionContext should be sufficient.

```scala
import scala.concurrent.{ExecutionContext, Future}
import ExecutionContext.Implicits.global

// Matrix and fatMatrix are assumed to be defined elsewhere
val inverseFuture: Future[Matrix] = Future {
  fatMatrix.inverse()
} // ec is implicitly passed
```

Simple example of initializing a Future using the default global ExecutionContext. Example extracted from the Scala documentation.
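The global context is not the only option. As a sketch, assuming a workload where a fixed number of threads is preferable (the pool size of 4 below is an arbitrary choice), an ExecutionContext can be built from a Java `ExecutorService` with `ExecutionContext.fromExecutorService` and passed to `Future` explicitly in its second argument list, instead of implicitly:

```scala
import java.util.concurrent.Executors
import scala.concurrent.{Await, ExecutionContext, ExecutionContextExecutorService, Future}
import scala.concurrent.duration._

// A dedicated pool of 4 threads (arbitrary size for this sketch)
val pool: ExecutionContextExecutorService =
  ExecutionContext.fromExecutorService(Executors.newFixedThreadPool(4))

// The ExecutionContext is passed explicitly in the second argument list
val onPool: Future[String] = Future {
  Thread.currentThread().getName
}(pool)

val threadName: String = Await.result(onPool, 5.seconds)
println(s"Computed on thread: $threadName")

// Pools created this way are owned by the application and must be shut down,
// otherwise their non-daemon threads keep the JVM alive
pool.shutdown()
```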

Let’s look to the future

By design, Futures let the application avoid blocking while that value is being computed. However, Scala also provides ways to block and force synchronous execution in cases where it is strictly necessary, such as Await.result and Await.ready from scala.concurrent.

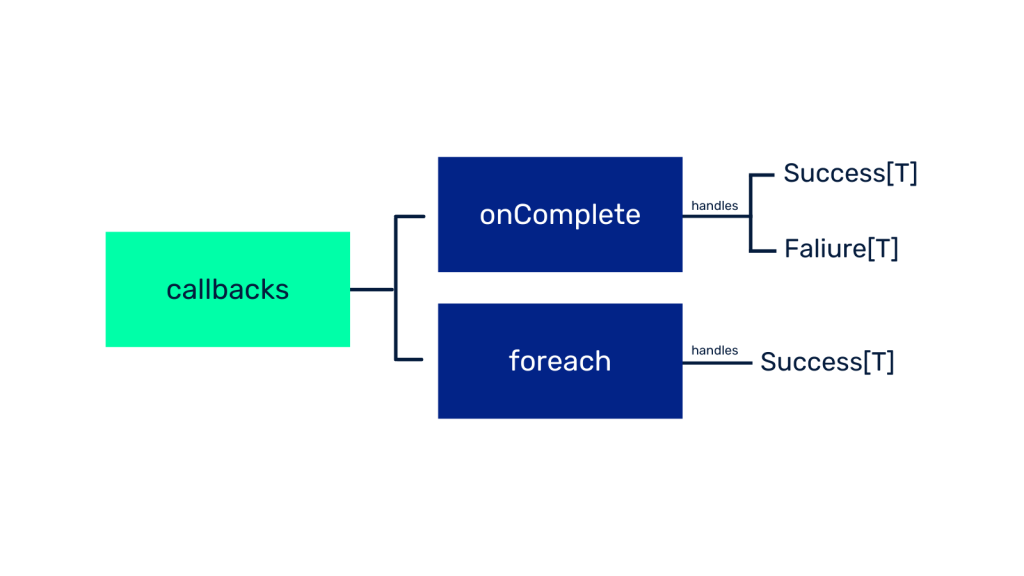
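A minimal sketch of the blocking approach, using `Await.result`: it blocks the current thread until the Future completes, and throws a `TimeoutException` if that does not happen within the given duration. The one-second sleep and the value 42 are illustrative assumptions.

```scala
import scala.concurrent.{Await, Future, TimeoutException}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._
import scala.util.Try

// A Future that needs about one second to produce its value
val slowAnswer: Future[Int] = Future {
  Thread.sleep(1000)
  42
}

// Waiting only 100 ms is not enough: Await.result throws a TimeoutException,
// which Try captures as a Failure
val tooEarly: Try[Int] = Try(Await.result(slowAnswer, 100.millis))
val timedOut: Boolean = tooEarly.failed.toOption.exists(_.isInstanceOf[TimeoutException])

// With a generous timeout, the current thread blocks until the value is ready
val answer: Int = Await.result(slowAnswer, 5.seconds)
println(s"Timed out first: $timedOut, then got: $answer")
```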

However, Futures are usually consumed asynchronously. To work this way and obtain the value once it has been computed, Scala uses callbacks. We can distinguish two main types of callbacks: the onComplete method and the foreach method.

Generally, the result of a Future[T] can be expressed as a Try[T] object, which becomes a Success[T] if the value was obtained successfully and a Failure[T] otherwise. The onComplete method lets us handle both cases, whether the value was obtained successfully or not, while foreach only handles successful results.
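The difference between both callbacks can be sketched as follows. The pizza names and the `describe` helper are hypothetical; `describe` completes a `Promise` from inside `onComplete` only so that the script can wait for the callback itself to have run, since waiting on the original Future does not guarantee that its callbacks have already executed.

```scala
import scala.concurrent.{Await, Future, Promise}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._
import scala.util.{Failure, Success}

val goodPizza: Future[String] = Future("Margherita pizza")
val burntPizza: Future[String] = Future(throw new IllegalStateException("oven too hot"))

// foreach runs its function only if the Future succeeds;
// for burntPizza the function is never called
goodPizza.foreach(pizza => println(s"foreach got: $pizza"))
burntPizza.foreach(pizza => println(s"never printed: $pizza"))

// onComplete receives a Try[String], so both outcomes can be handled.
// The description is handed to a Promise so we can wait for the callback.
def describe(f: Future[String]): Future[String] = {
  val described = Promise[String]()
  f.onComplete {
    case Success(pizza) => described.success(s"Success: $pizza")
    case Failure(error) => described.success(s"Failure: ${error.getMessage}")
  }
  described.future
}

val goodText: String = Await.result(describe(goodPizza), 5.seconds)
val burntText: String = Await.result(describe(burntPizza), 5.seconds)
println(goodText)  // Success: Margherita pizza
println(burntText) // Failure: oven too hot
```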

Here is a short example that illustrates the asynchronous use of Futures in Scala. Imagine that we put a pizza in the oven and, while it is baking, we keep working. A simple representation could be the following:

```scala
import scala.concurrent.Future
import scala.concurrent.ExecutionContext.Implicits.global
import scala.util.{Success, Failure}
import scala.util.Random.nextInt

// Simulates baking a pizza: takes a random whole number of seconds (0 to timeCooking - 1)
def cookPizza(timeCooking: Int = 8): Future[String] = Future {
  println("Start cooking pizza...")
  Thread.sleep(nextInt(timeCooking) * 1000)
  "Pepperoni pizza"
}

// Simulates doing other work: four blocks of `time` seconds each
def work(time: Int = 2): Unit = {
  println("Working...")
  Thread.sleep(time * 1000)
  for (_ <- 1 to 3) {
    println("Still working...")
    Thread.sleep(time * 1000)
  }
  println("Work finished! Time to eat!")
}

// Possible main
val futurePizza: Future[String] = cookPizza()

// Registering the callback does not block; it runs once the pizza is ready
futurePizza.onComplete {
  case Success(pizza) => println(s"Your $pizza is done!")
  case Failure(_)     => println("Oh! There was an error cooking your pizza.")
}

work()
```

Example of retrieving the value of a Future asynchronously.

A possible output of this code is the following:

```
Start cooking pizza...
Working...
Still working...
Your Pepperoni pizza is done!
Still working...
Still working...
Work finished! Time to eat!
```

As we can see, in this execution the pizza was ready shortly after the first "Still working..." message, that is, during the first iteration of the loop in the work() method. The message, however, was printed asynchronously, without blocking the method that simulates the work.

Finally, there is another way to work with the value of a Future: for-comprehensions or the map() method. Simply put, map() takes a function that transforms the Future's value and returns a new Future, which will be completed with the transformed value once the original Future has completed successfully (if the original fails, the new Future fails with the same exception). In other words, it transforms the potential value of the original Future without blocking to wait for it.
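A short sketch of both approaches, reusing the pizza theme with hypothetical `futurePizza` and `futureDrink` values. `map` derives a new Future from the eventual value, a for-comprehension (which the compiler translates into `flatMap` and `map` calls) combines several Futures, and a failure in the original Future propagates to the derived one. `Await.result` appears only at the end, to print the results.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

// Hypothetical Futures, each simulating some work with a short sleep
val futurePizza: Future[String] = Future { Thread.sleep(200); "Pepperoni pizza" }
val futureDrink: Future[String] = Future { Thread.sleep(100); "lemonade" }

// map: a new Future that will hold the transformed value; nothing blocks here
val futureSliced: Future[String] = futurePizza.map(pizza => s"Sliced $pizza")

// for-comprehension: combines both values once both Futures have succeeded
val futureMeal: Future[String] = for {
  pizza <- futurePizza
  drink <- futureDrink
} yield s"$pizza with $drink"

// A failed original Future yields a failed mapped Future (same exception)
val futureFailed: Future[Int] =
  Future.failed[String](new RuntimeException("no dough")).map(_.length)

// Blocking only here, to print the results of the example
val sliced: String = Await.result(futureSliced, 5.seconds)
val meal: String = Await.result(futureMeal, 5.seconds)
println(sliced) // Sliced Pepperoni pizza
println(meal)   // Pepperoni pizza with lemonade
```

Because both Futures are created before the for-comprehension, they run concurrently. Writing `Future { ... }` directly inside the comprehension's generators would instead start the second computation only after the first one finishes.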

Conclusion

In short, Futures in Scala are a simple yet very powerful way to manage concurrency and parallelism. Moreover, the features covered in this post are not the only ones: there is a wide variety of solutions that combine the concepts explained here.

Futures are very common in cases where we need to query other systems, perform heavy computations, or access external repositories, so that execution is not blocked while the data is obtained.

For more information, we encourage you to consult the Scala documentation on Futures and to keep an eye out for upcoming Damavis posts.

If you found this article interesting, visit the Data Engineering category of our blog to see similar posts, and share it on social media with all your contacts. Don’t forget to mention us @Damavisstudio to let us know your opinion. See you soon!